Building a Multi-Agent RAG System for Technical Learning

Learning new tech usually starts with finding solid, well-structured resources that actually make sense to you. I ended up building an app that uses AI agents to go through technical books and other materials, breaking them down into clearer, bite-sized chapters. At first, I just wanted to mess around with LangGraph and a few AI tools, but over time the project turned into something bigger—a space where I could bring together my interests in software, AI, and system design.

I’ve been chipping away at this project on weekends for about a year. It started out as me just playing around with LangGraph to see what this whole graph-based approach was all about. After a few months, things started to click and I decided to give the whole system some structure.

System at a Glance

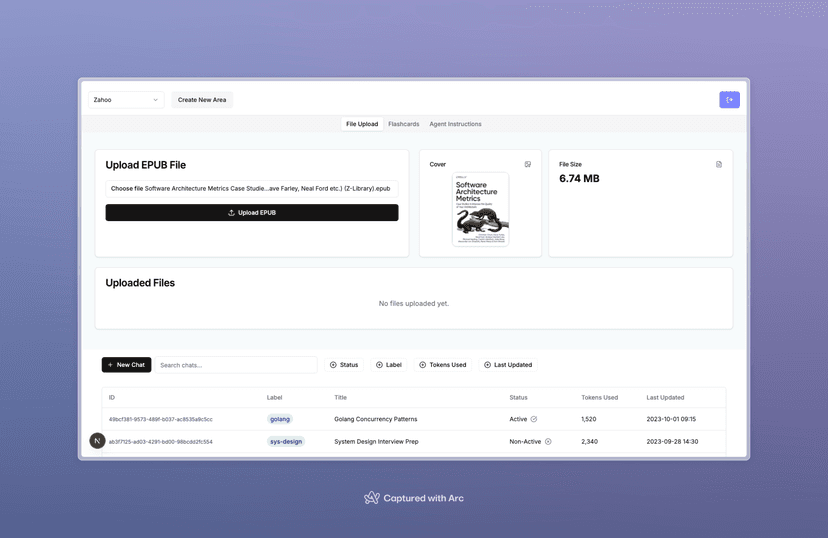

At its core, the app is a multi-agent, retrieval-augmented chat that turns technical books into interactive study modules. A LangGraph-powered state machine coordinates the AI agents, and FastAPI exposes all that to a Next.js frontend.

Python and FastAPI felt like the right fit—mostly because the ML and LLM ecosystem is so Python-heavy. Even though I’d been using Go more at work, Python's quick prototyping and solid libraries won me over pretty fast.

I started this project because traditional learning methods just weren’t cutting it for me as a developer. A lot of structured resources felt repetitive—I kept running into stuff I already knew. Once I began experimenting with AI, I realized LLMs could help break down dense technical material into simpler, interactive chunks automatically.

What started small quickly snowballed. Every time I had a new idea, it felt worth building—classic case of feature creep. That made things interesting (and a bit chaotic), especially since I was playing PM, developer and obviously stakeholder 😂

In the end, the app turned into more than just a learning tool. It became my playground for trying out ideas around modularity, architecture, and system design—hands-on stuff that’s directly improved how I build software.

One of my main goals was to keep the architecture flexible by decoupling things as much as possible. I used Poetry to manage dependencies and split the backend into separate packages so I could isolate the core logic (like how the app talks to LLMs) from the API layer. That way, switching frameworks—or even languages—down the road wouldn’t be a nightmare.

Working with multiple backend packages gave me a good feel for modern systems design. I learned how to manage different components—like the database, vector store, and LangGraph for agent coordination—while keeping the system modular and cohesive. Docker Compose made it super easy to spin everything up locally, so I could focus more on building features than wrangling setup scripts.

I also played around with real-time data delivery using WebSockets and Server-Sent Events (SSE). Both were fun to experiment with, though pushing large or complex data over sockets could get a bit messy. Still, it beat the constant polling approach in terms of performance and responsiveness.

If you're curious about the nitty-gritty—like how EPUB parsing works, how embeddings are handled, or how the state machine is set up—check out the README. I’ve documented most of it there.

Outcome & Learnings

I kicked this off in the summer of ’24 and ended up learning a ton—especially about how fast the LLM ecosystem moves. Tools and libraries were shifting constantly, which gave me a lot of room to experiment. One thing I’d do differently next time is go even harder on modularity right from the start—it just makes evolving the project way easier. I also found myself getting really into data parsing (especially EPUBs), so who knows—maybe the next project will be something like video editing with ffmpeg.